Multi-Arm Parallel Group Design Explained

What do unconventional arm wrestling and randomized trials have in common?

Each can have many arms.

What is a 3 arm RCT?

Multi arm trials (or multi arm RCTs) are randomized experiments in which each individual is randomly assigned to one of several arms: usually two or more treatment variants and a control. A 3-arm RCT, for example, has two treatment variants and one control.

They can be referred to in a number of ways.

- multi-arm trials

- multi-armed trials

- multiarm trials

- multiarmed trials

- multi arm RCTs

- 3-arm, 4-arm, 5-arm, etc. RCTs

- multi-factorial design (a type of multi-arm trial)

When I think of a multiarmed wrestling match, I imagine a mess. Can’t you say the same about multiarmed trials?

Quite the contrary. They can become messy, but not if they’re done with forethought and consultation with stakeholders.

I had the great opportunity to be the guest editor of a special issue of Evaluation Review on the topic of Multiarmed Trials, where experts shared their knowledge.

Special Issue: Multi-armed Randomized Control Trials in Evaluation and Policy Analysis

We were fortunate to receive five valuable contributions. I hope the issue will serve as a go-to reference for evaluators who want to explore options beyond the standard two-armed (treatment-control) arrangement.

The first three articles are by pioneers of the method.

- Larry L. Orr and Daniel Gubits: Some Lessons From 50 Years of Multi-armed Public Policy Experiments

- Joseph Newhouse: The Design of the RAND Health Insurance Experiment: A Retrospective

- Judith M. Gueron and Gayle Hamilton: Using Multi-Armed Designs to Test Operating Welfare-to-Work Programs

They cover a wealth of ideas essential for the successful conduct of multi-armed trials.

- Motivations for study design and the choice of treatment variants, and their relationship to real-world policy interests

- The importance of reflecting the complex ecology and political reality of the study context to get stakeholder buy-in and participation

- The importance of patience and deliberation in selecting sites and samples

- The allocation of participants to treatment arms with a view to statistical power

Should I read this special issue before starting my own multi-armed trial?

Absolutely! It’s easy to go wrong with this design, but if done right, it can yield more information than you’d get with a 2-armed trial. How you allot the sample depends on the question you want to answer. In a 3-armed trial, do you want 33.3% of the sample in each of the three conditions (two treatment conditions and control), or 25% in each of the treatment arms and 50% in control? It depends on the contrast that matters most: an even split favors the head-to-head comparison of the two treatments, while a larger control group favors comparing the two treatments, taken together, against control. So the design requires you to think more deeply about the question you want to answer.
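The allocation question above can be made concrete with a little arithmetic. The sketch below is only an illustration under simplifying assumptions that are not from the special issue: a continuous outcome with the same standard deviation in every arm, a hypothetical total sample of 1,200, and simple differences in means. The function names are made up for this example.

```python
import math

def se_diff(n_a, n_b, sigma=1.0):
    """Standard error of a difference in two group means (common SD sigma)."""
    return sigma * math.sqrt(1.0 / n_a + 1.0 / n_b)

def contrast_ses(total_n, shares):
    """shares = fractions of the sample in (treatment A, treatment B, control)."""
    n_a, n_b, n_c = (total_n * s for s in shares)
    return {
        "A vs B": se_diff(n_a, n_b),
        "A vs control": se_diff(n_a, n_c),
        "A+B pooled vs control": se_diff(n_a + n_b, n_c),
    }

# Hypothetical total sample size; only the relative sizes of the SEs matter.
equal = contrast_ses(1200, (1 / 3, 1 / 3, 1 / 3))
heavy_control = contrast_ses(1200, (0.25, 0.25, 0.50))

for name in equal:
    print(f"{name:24s} equal={equal[name]:.4f}  25/25/50={heavy_control[name]:.4f}")
```

Under these assumptions, the even split gives the smaller standard error for the head-to-head contrast, the 25/25/50 split gives the smaller standard error for the pooled-treatment contrast, and the single-treatment-versus-control contrast comes out the same either way.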

This sounds risky. Why would I ever want to run a multi-armed trial?

In short, running a multi-armed trial allows a head-to-head test of alternatives, to determine which provides a larger or more immediate return on investment. It also sets up nicely the question of whether certain alternatives work better with certain beneficiaries.

The next two articles make this clear. One study randomized treatment sites to one of several enhancements to assess the added value of each. The other used a nifty multifactorial design to simultaneously test several dimensions of a treatment.

- Laura Peck, Hilary Bruck, and Nicole Constance: Insights From the Health Profession Opportunity Grant Program’s Three-Armed, Multi-Site Experiment for Policy Learning and Evaluation Practice

- Randall Juras, Amy Gorman, and Jacob Alex Klerman: Using Behavioral Insights to Market a Workplace Safety Program: Evidence From a Multi-Armed Experiment

More About 3 Arm RCTs

The special issue of Evaluation Review helped motivate the design of a multiarmed trial conducted through the Regional Educational Laboratory (REL) Southwest in partnership with the Arkansas Department of Education (ADE). We co-authored this study through our role on REL Southwest.

In this study with ADE, we randomly assigned 700 Arkansas public elementary schools to one of eight conditions that determined how information about the Reading Initiative for Student Excellence (R.I.S.E.) state literacy website was communicated to their households.

The treatments varied on these dimensions.

- Mode of communication (email only or email and text message)

- The presentation of information (no graphic or with a graphic)

- Type of sender (generic sender or known sender)

In January 2022, households with children in these schools were sent three rounds of communications with information about literacy and a link to the R.I.S.E. website. The study examined the impact of these communications on whether parents and guardians clicked the link to visit the website (click rate). We also conducted an exploratory analysis of differences in how long they spent on the website (time on page).

How do you tell the effects apart?

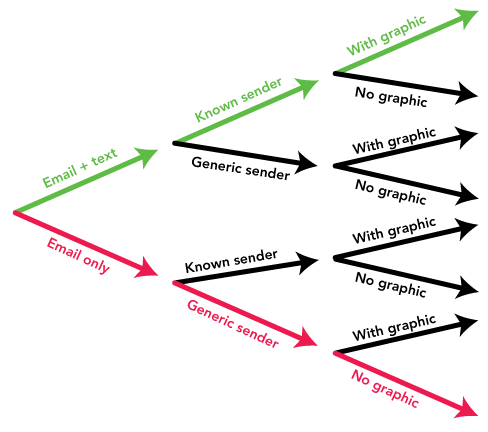

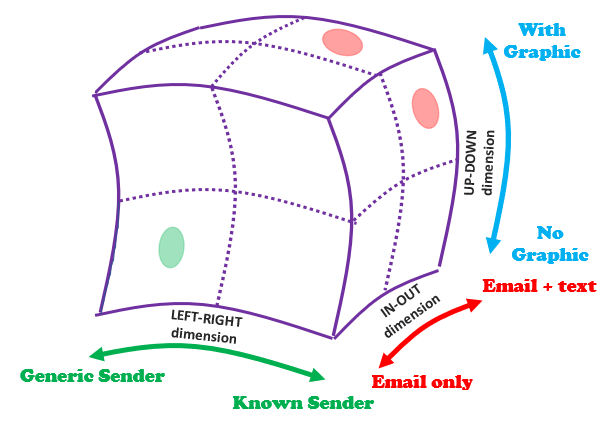

It all falls out nicely if you imagine the conditions as branches, or cells in a cube (both are pictured above).

In the branching representation, there are eight possible pathways from left to right representing the eight conditions.

In the cube representation, the eight conditions correspond to the eight distinct cells.
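The eight pathways (or cells) are simply every combination of the three two-level dimensions. Here is a minimal sketch of enumerating them and dealing 700 schools out to them at random. The assignment scheme (shuffle, then deal in turn), the seed, and the integer school IDs are assumptions for illustration; the study's actual randomization procedure is not described here.

```python
import itertools
import random
from collections import Counter

# Factor levels as named in the study description.
FACTORS = {
    "mode": ["email only", "email + text"],
    "graphic": ["no graphic", "graphic"],
    "sender": ["generic sender", "known sender"],
}

# Each condition is one path through the branches, or one cell of the cube.
conditions = list(itertools.product(*FACTORS.values()))

def assign(school_ids, conditions, seed=0):
    """Shuffle the schools, then deal them out to conditions in turn,
    so that cell sizes differ by at most one (an assumed, simple scheme)."""
    rng = random.Random(seed)
    shuffled = list(school_ids)
    rng.shuffle(shuffled)
    return {s: conditions[i % len(conditions)] for i, s in enumerate(shuffled)}

assignment = assign(range(700), conditions)  # hypothetical school IDs 0..699
cell_sizes = Counter(assignment.values())
for cond in conditions:
    print(cond, cell_sizes[cond])
```

Because 700 does not divide evenly by eight, this scheme leaves some cells with one more school than others.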

In the study, we evaluated the impact of each dimension across levels of the other dimensions: for example, whether click rate increases if email is accompanied by a text message, compared to just email, irrespective of who the sender is or whether the graphic is used.

We also tested the impact on click rates of the “deluxe” version (email + text, with known sender and graphic, which is the green arrow path in the branch diagram [or the red dot cell in the cube diagram]) versus the “plain” version (email only, generic sender, and no graphic, which is the red arrow path in the branch diagram [or the green dot cell in the cube diagram]).
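The two kinds of contrast just described can be sketched with a small table of cell-level click rates. The numbers below are made up for illustration and are not results from the study. The equal weighting of cells is also an assumption; the study's actual estimation approach may differ.

```python
# Hypothetical click rates per cell, keyed by (text, graphic, known_sender),
# where 1 means the feature is present. Values are illustrative only.
click_rate = {
    (0, 0, 0): 0.040, (0, 0, 1): 0.050,
    (0, 1, 0): 0.045, (0, 1, 1): 0.055,
    (1, 0, 0): 0.060, (1, 0, 1): 0.070,
    (1, 1, 0): 0.065, (1, 1, 1): 0.080,
}

def main_effect(rates, factor_index):
    """Average rate with the factor on minus average rate with it off,
    taken over all levels of the other two factors (equal cell weights)."""
    on = [r for cell, r in rates.items() if cell[factor_index] == 1]
    off = [r for cell, r in rates.items() if cell[factor_index] == 0]
    return sum(on) / len(on) - sum(off) / len(off)

# Effect of adding text, irrespective of sender and graphic.
text_effect = main_effect(click_rate, 0)

# "Deluxe" cell (all features on) versus "plain" cell (all features off).
deluxe_vs_plain = click_rate[(1, 1, 1)] - click_rate[(0, 0, 0)]

print(f"main effect of adding text: {text_effect:.4f}")
print(f"deluxe vs plain:            {deluxe_vs_plain:.4f}")
```

The main effect draws on all eight cells, four against four, while the deluxe-versus-plain contrast uses only two of them, so the first is typically estimated with more precision than the second.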

That’s all nice and dandy, but have you ever heard of the KISS principle: Keep it Simple Sweetie? You are taking some risks in design, but getting some more information. Is the tradeoff worth it? I’d rather run a series of two-armed trials. I am giving you a last chance to convince me.

Two-armed trials will always be the staple approach. But consider the following.

- Knowing what works among educational interventions is a starting point, but it does not go far enough.

- The last 5-10 years have witnessed prioritization of questions and methods for addressing the questions of what works for whom and under which conditions.

- However, even this may not go far enough to get to the question at the heart of what people on the ground want to know. We agree with Tony Bryk that practitioners typically want to answer the following question.

What will it take to make it (the program) work for me, for my students, and in my circumstances?

There are plenty of qualitative, quantitative, and mixed methods to address this question. There also are many evaluation frameworks to support systematic inquiry to inform various stakeholders.

We think multi-armed trials help to tease out the complexity in the interactions among treatments and conditions and so help address the more refined question Bryk asks above.

Consider our example above. One question we explored was how response rates differed between rural and urban schools. One might speculate the following.

- Rural schools are smaller, allowing principals to get to know parents more personally

- Rural and non-rural households may have different kinds of usage and connectivity with email versus text and with MMS versus SMS

If these moderating effects matter, then the study, as conducted, may help with customizing communications, or providing a rationale for improving connectivity, and altogether optimizing the costs of communication.
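A moderating effect of this kind is a difference between subgroup effects. The sketch below uses made-up click rates, not findings from the study, to show how the effect of adding a text message could be compared between rural and urban schools.

```python
# Hypothetical click rates by locale and mode; values are illustrative only.
rates = {
    ("rural", "email only"): 0.040,
    ("rural", "email + text"): 0.070,
    ("urban", "email only"): 0.050,
    ("urban", "email + text"): 0.060,
}

def text_effect(locale):
    """Gain in click rate from adding a text message within one locale."""
    return rates[(locale, "email + text")] - rates[(locale, "email only")]

# The moderating effect is the difference between the two subgroup effects.
moderation = text_effect("rural") - text_effect("urban")

print(f"rural effect: {text_effect('rural'):.3f}")
print(f"urban effect: {text_effect('urban'):.3f}")
print(f"difference (interaction): {moderation:.3f}")
```

In a real analysis the difference would come with a standard error, and a gap like this would only guide customization if it were distinguishable from noise.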

Multi-armed trials, done well, increase the yield of actionable information to support both researcher and on-the-ground stakeholder interests!

Well, thank you for your time. I feel well-armed with information. I’ll keep thinking about this and wrestle with the pros and cons.