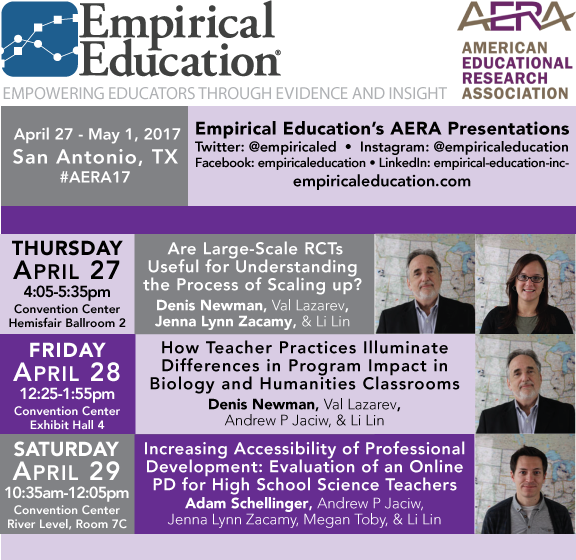

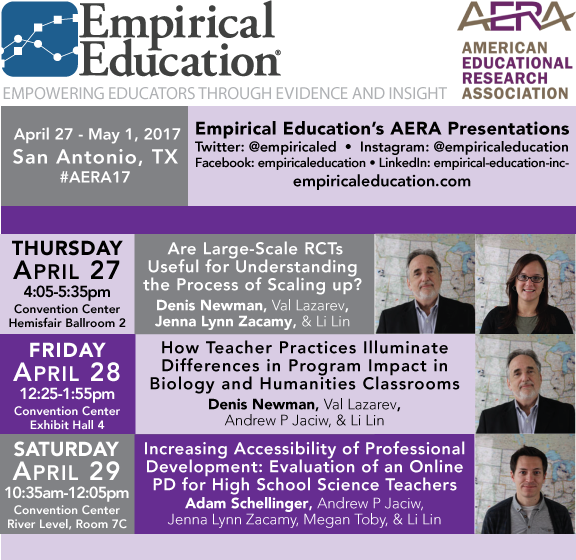

We will again be presenting at the annual meeting of the American Educational Research Association (AERA). Join the Empirical Education team in San Antonio, TX from April 27 – 30, 2017.

Our research presentations will include the following.

Increasing Accessibility of Professional Development (PD): Evaluation of an Online PD for High School Science Teachers

Authors: Adam Schellinger, Andrew P Jaciw, Jenna Lynn Zacamy, Megan Toby, & Li Lin

In Event: Promoting and Measuring STEM Learning

Saturday, April 29 10:35am to 12:05pm

Henry B. Gonzalez Convention Center, River Level, Room 7C

Abstract: This study examines the impact of an online teacher professional development program focused on academic literacy in high school science classes. A one-year randomized controlled trial measured the impact of Internet-Based Reading Apprenticeship Improving Science Education (iRAISE) on instructional practices and student literacy achievement in 27 schools in Michigan and Pennsylvania. Researchers found a differential impact of iRAISE favoring students with lower incoming achievement (although there was no overall impact of iRAISE on student achievement). Additionally, there were positive impacts on several instructional practices. These findings are consistent with the specific goals of iRAISE: to provide high-quality, accessible online training that improves science teaching. The authors compare these results to previous evaluations of the same intervention delivered in a face-to-face format.

How Teacher Practices Illuminate Differences in Program Impact in Biology and Humanities Classrooms

Authors: Denis Newman, Val Lazarev, Andrew P Jaciw, & Li Lin

In Event: Poster Session 5 - Program Evaluation With a Purpose: Creating Equal Opportunities for Learning in Schools

Friday, April 28 12:25 to 1:55pm

Henry B. Gonzalez Convention Center, Street Level, Stars at Night Ballroom 4

Abstract: This paper reports research aimed at explaining the positive impact found in a major RCT for students in the classrooms of a subgroup of teachers. Our goal was to understand why there was an impact for science teachers but not for teachers of humanities, i.e., history and English. We have labelled our analysis “moderated mediation” because we start with the finding that the program’s success was moderated by the subject taught by the teacher and then go on to look at the differences in mediation processes depending on the subject being taught. We find that program impacts on teacher practices differ by mediator (as measured in surveys and observations) and that mediators are differentially associated with student impact based on context.

Are Large-Scale Randomized Controlled Trials Useful for Understanding the Process of Scaling Up?

Authors: Denis Newman, Val Lazarev, Jenna Lynn Zacamy, & Li Lin

In Event: Poster Session 3 - Applied Research in School: Education Policy and School Context

Thursday, April 27 4:05 to 5:35pm

Henry B. Gonzalez Convention Center, Ballroom Level, Hemisfair Ballroom 2

Abstract: This paper reports on a large-scale program evaluation that included an RCT and a parallel study of 167 schools outside the RCT, which provided an opportunity to study the growth of a program and to compare the two contexts. Teachers in both contexts were surveyed, and a large subset of the questions was asked of both scale-up teachers and teachers in the treatment schools of the RCT. We find large differences in the level of commitment to program success in the school. Far less commitment was found in the RCT, suggesting that a large-scale RCT may not capture the processes at play in the scale-up of a program.

We look forward to seeing you at our sessions to discuss our research. You can also view our presentation schedule here.