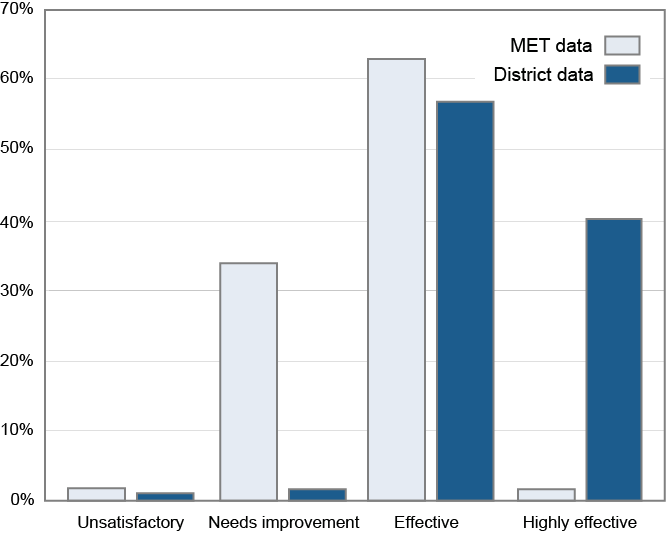

We are all familiar with approaches to combining student growth metrics and other measures to generate a single measure that can be used to rate teachers for the purpose of personnel decisions. For example, as an alternative to using seniority as the basis for reducing the workforce, a school system may want to base such decisions, at least in part, on a ranking built from a number of measures of teacher effectiveness. One of the reports released January 8 by the Measures of Effective Teaching (MET) project addressed approaches to creating a composite (i.e., a single number that averages various aspects of teacher performance) from multiple measures such as value-added modeling (VAM) scores, student surveys, and classroom observations. Working with the thousands of data points in the MET longitudinal database, the researchers were able to try out multiple statistical approaches to combining measures. The important recommendation from this research for practitioners is that, while there is no single best way to weight the various measures that are combined in the composite, balancing the weights more evenly tends to increase reliability.
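To make the idea of a weighted composite concrete, here is a minimal sketch in Python. It is not the MET researchers' procedure; it simply standardizes each measure and takes a weighted average. The teacher scores, the measure names, and both sets of weights are invented for illustration.

```python
from statistics import mean, pstdev


def z_scores(values):
    """Standardize scores to mean 0 and standard deviation 1."""
    m = mean(values)
    s = pstdev(values)
    if s == 0:
        return [0.0 for _ in values]
    return [(v - m) / s for v in values]


def composite(measures, weights):
    """Weighted average of standardized measures, one value per teacher.

    measures: dict of measure name -> list of scores (same teacher order)
    weights:  dict of measure name -> non-negative weight
    """
    total = sum(weights[name] for name in measures)
    standardized = {name: z_scores(vals) for name, vals in measures.items()}
    n_teachers = len(next(iter(measures.values())))
    return [
        sum(weights[name] / total * standardized[name][i] for name in measures)
        for i in range(n_teachers)
    ]


# Hypothetical scores for four teachers (made up for this example).
measures = {
    "vam": [0.10, -0.20, 0.30, 0.00],
    "survey": [3.9, 4.2, 3.1, 3.6],
    "observation": [2.8, 3.4, 2.5, 3.0],
}

# Two illustrative weighting schemes: balanced vs. dominated by VAM.
equal = composite(measures, {"vam": 1, "survey": 1, "observation": 1})
vam_heavy = composite(measures, {"vam": 0.8, "survey": 0.1, "observation": 0.1})

top_equal = equal.index(max(equal))
top_vam_heavy = vam_heavy.index(max(vam_heavy))
```

With these invented numbers, the two weighting schemes put different teachers at the top of the ranking, which is why the choice of weights matters for any decision that depends on rank.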

While acknowledging the value of these analyses, we want to take a step back in this commentary. Here we ask whether agencies may sometimes be jumping to the conclusion that a composite is necessary when the individual measures (and even the components of these measures) may have greater utility than the composite for many purposes.

The basic premise behind creating a composite measure is the idea that there is an underlying characteristic that the composite can more or less accurately reflect. The criterion for a good composite is the extent to which the result accurately identifies a stable characteristic of the teacher’s effectiveness.

A problem with this basic premise is that in focusing on the common factor, the aspects of each measure that are unrelated to the common factor get left out, treated as noise in the statistical equation. But what if observations and student surveys measure things that are unrelated to what the teacher’s students are able to achieve in a single year under her tutelage (the basis for a VAM score)? What if there are distinct domains of teacher expertise that have little relation to VAM scores? By definition, the multifaceted nature of teaching gets reduced to a single value in the composite.

This single value does have a use in decisions that require an unequivocal ranking of teachers, such as some personnel decisions. For most purposes, however, a multifaceted set of measures would be more useful. The single measure has little value for directing professional development, whereas the detailed output of the observation protocols is designed for just that. Consider a principal deciding which teachers to assign as mentors, or a district administrator deciding which teachers to move toward a principalship. Might it be useful, in such cases, to have several characteristics to represent different dimensions of abilities relevant to success in the particular roles?

Instead of collapsing the multitude of data points from achievement, surveys, and observations, consider an approach that makes maximum use of the data points to identify several distinct characteristics. In the usual method for constructing a composite (and in the MET research), the results for each measure (e.g., the survey or observation protocol) are first collapsed into a single number, and then these values are combined into the composite. This approach already obscures a large amount of information. The Tripod student survey provides scores on the seven Cs; an observation framework may have a dozen characteristics; and even VAM scores, usually thought of as a summary number, can be broken down (with some statistical limitations) into success with low-scoring vs. with high-scoring students (or any other demographic category of interest). Analyzing dozens of these data points for each teacher can potentially identify several distinct facets of a teacher’s overall ability. Some of these facets will not be strongly correlated with VAM scores, yet they may be related to the teacher’s ability to inspire students in subsequent years to take more challenging courses and stay in school, or to engage parents in ways that show up years later.
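As a rough sketch of what working with the uncollapsed data points might look like, the Python below keeps each component score separate and checks how strongly it tracks VAM. The component names, the scores for five teachers, and the 0.3 cutoff are all assumptions made for illustration. A real analysis would need far more teachers and a proper multivariate method such as factor analysis.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation between two equal-length lists of scores."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    if vx == 0 or vy == 0:
        return 0.0
    return cov / sqrt(vx * vy)


# Hypothetical VAM scores for five teachers (made up for this example).
vam = [0.10, -0.20, 0.30, 0.00, 0.15]

# Hypothetical component-level scores, kept separate rather than collapsed.
# The names are illustrative placeholders, not taken from a real instrument.
components = {
    "survey_challenge": [3.8, 3.0, 4.4, 3.5, 4.0],
    "survey_care": [4.5, 3.8, 3.9, 4.4, 3.1],
    "obs_questioning": [2.9, 2.4, 3.5, 2.8, 3.1],
}

# Correlation of each component with VAM.
correlations = {name: pearson(vals, vam) for name, vals in components.items()}

# Components that barely track VAM are candidates for a distinct facet.
# The 0.3 cutoff is arbitrary and chosen only for this sketch.
CUTOFF = 0.3
weakly_related = [name for name, r in correlations.items() if abs(r) < CUTOFF]
```

In this invented data set, one survey component moves almost independently of VAM. A composite built around the common factor would treat that component as noise, whereas a multifaceted profile would report it as its own dimension.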

Creating a single composite measure of teaching has value for a range of administrative decisions. However, the mass of teacher data now being collected is only beginning to be tapped for improving teaching and developing schools as learning organizations.