Empirical Education has released the final report of a project that developed a unique perspective on how school systems can use scientific evidence. Representing more than three years of research and development effort, the report describes the startup of six randomized experiments and traces how local agencies decided to undertake the studies and how they used the resulting information. The project was funded by a grant from the Institute of Education Sciences under its program on Education Policy, Finance, and Systems. It started with a straightforward conjecture:

The combination of readily available student data and the greater pressure on school systems to improve productivity through the use of scientific evidence of program effectiveness could lead to a reduction in the cost of rigorous program evaluations and to a rapid increase in the number of such studies conducted internally by school districts.

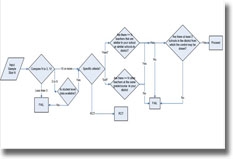

The prevailing view of scientifically based research is that educators are consumers of research conducted by professionals. There is also a belief that rigorous research is extraordinarily expensive. The supposition behind our proposal was that costs could be brought low enough for experiments to be conducted routinely in support of district decisions, with local educators as the producers of evidence. The project contributed a number of methodological, analytic, and reporting approaches that could lower costs and make rigorous program evaluation more accessible to district researchers.

An important result of the work was bringing to light the differences between conventional research design, aimed at broadly generalizable conclusions, and design aimed at answering a local question, where sampling is restricted to the relevant “unit of decision making,” such as a school district with jurisdiction over decisions about instructional or professional development programs.

The final report concludes by characterizing research use at the central office level, whether described as “data-driven” or “evidence-based” decision making, as a process that moves through stages. Looking for descriptive patterns in the data (i.e., data mining for questions of interest) comes first. Statistical analysis of differences between, and associations among, variables of interest, using appropriate methods such as hierarchical linear modeling (HLM), comes next. Only then does a district adopt an experimental research design to isolate causal, moderator, and mediator effects. The report proposes that most districts are not yet prepared to produce and use experimental evidence, but that they could start with useful descriptive exploration of their data, leading to a needs assessment, as a first step toward a more proactive use of evaluation to inform their decisions.

For a copy of the report, please select the paper *Toward School Districts Conducting Their Own Rigorous Program Evaluation* from our reports and papers webpage.