Report Released on the Effectiveness of SRI/CAST's Enhanced Units

Summary of Findings

Empirical Education has released the results of a semester-long randomized experiment on the effectiveness of SRI/CAST’s Enhanced Units (EU). The study was conducted in cooperation with one district in California and two districts in Virginia, and was funded through a competitive Investing in Innovation (i3) grant from the U.S. Department of Education. EU combines research-based content enhancement routines, collaboration strategies, and technology components for secondary history and biology classes. The goal of the grant is to improve student content learning and higher-order reasoning, especially for students with disabilities. EU was developed during a two-year design-based implementation process in which teachers and administrators co-designed the units with developers.

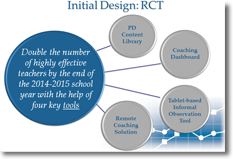

The evaluation employed a group randomized controlled trial in which classes were randomly assigned within teachers either to receive the EU curriculum or to continue with business-as-usual instruction. All teachers were trained in Enhanced Units. Overall, the study involved three districts, five schools, 13 teachers, 14 randomized blocks, and 30 classes (15 in each condition, with 18 in biology and 12 in U.S. History). This was an intent-to-treat design: impact estimates were generated by comparing average student outcomes for classes randomly assigned to the EU group with average student outcomes for classes assigned to the control group, regardless of the level of participation in, or teacher implementation of, EU instructional approaches after random assignment.
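The logic of this design can be illustrated with a minimal sketch, which is not the study's actual analysis. The block names, class IDs, and scores below are hypothetical, and the function names `assign_within_blocks` and `itt_difference` are made up for this illustration. The sketch randomizes classes within each block and then computes a simple intent-to-treat difference in class-level average outcomes by assigned condition. It ignores blocking and student-level clustering, which a full impact model would normally account for.

```python
import random
from statistics import mean


def assign_within_blocks(blocks, seed=2020):
    """Randomly split the classes in each block between 'EU' and 'control'."""
    rng = random.Random(seed)  # fixed seed so the illustration is reproducible
    assignment = {}
    for class_ids in blocks.values():
        shuffled = list(class_ids)
        rng.shuffle(shuffled)
        half = len(shuffled) // 2
        for position, class_id in enumerate(shuffled):
            assignment[class_id] = "EU" if position < half else "control"
    return assignment


def itt_difference(assignment, class_mean_scores):
    """Difference in average class outcomes by ASSIGNED condition.

    Classes are grouped by the arm they were randomized to, regardless of
    how fully EU was implemented afterwards (the intent-to-treat principle).
    """
    eu = [class_mean_scores[c] for c, arm in assignment.items() if arm == "EU"]
    control = [class_mean_scores[c] for c, arm in assignment.items() if arm == "control"]
    return mean(eu) - mean(control)


# Hypothetical data: three teacher blocks with two classes each,
# and made-up class-average posttest scores (not results from the study).
blocks = {
    "teacher_A": ["A1", "A2"],
    "teacher_B": ["B1", "B2"],
    "teacher_C": ["C1", "C2"],
}
class_mean_scores = {
    "A1": 0.62, "A2": 0.58,
    "B1": 0.71, "B2": 0.66,
    "C1": 0.55, "C2": 0.57,
}

assignment = assign_within_blocks(blocks)
print(assignment)
print(round(itt_difference(assignment, class_mean_scores), 3))
```

Because each teacher contributes classes to both conditions, differences between teachers are balanced across the two groups by design, which is the main motivation for randomizing within teachers.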

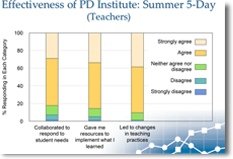

Overall, we found a positive impact of EU on student learning in history, but not in biology or across the two domains combined. Within biology, we found that students experienced a greater impact on the Evolution unit than on the Ecology unit. These findings support a theory developed by the program developers that EU works especially well with content that progresses in a sequential and linear way. We also found a positive differential effect favoring students with disabilities, which is an encouraging result given the goal of the grant.

Final Report of CAST Enhanced Units Findings

The full report for this study can be downloaded using the link below.

Dissemination of Findings

2023 Dissemination

In April 2023, the U.S. Department of Education’s Office of Innovation and Early Learning Programs (IELP) within the Office of Elementary and Secondary Education (OESE) compiled cross-project summaries of completed Investing in Innovation (i3) and Education Innovation and Research (EIR) projects. Our CAST Enhanced Units study is included in one of the cross-project summaries. Read the 16-page summary using the link below.

Findings from Projects with a Focus on Serving Students with Disabilities

2020 Dissemination

Hannah D’Apice presented these findings at the virtual conference of the Society for Research on Educational Effectiveness (SREE) in September 2020. Watch the recorded presentation using the link below.