Doing Something Truly Original in the Music of Program Evaluation

Is it possible to do something truly original in science?

How about in Quant evaluations in the social sciences?

The operative word here is "truly". I have in mind contributions that are "outside the box".

I would argue that standard Quant provides limited opportunity for originality. Yet, QuantCrit forces us to dig deep to arrive at original solutions - to reinterpret, reconfigure, and in some cases reinvent Quant approaches.

That is, I contend that Quant Crit asks the kinds of questions that force us to go outside the box of conventional assumptions and develop instrumentation and solutions that are broader and better. Yet, I qualify this by saying (and some will disagree), that doing so does not require us to give up the core assumptions that are at the foundation of Quant evaluation methods.

I find that developments and originality in jazz music closely parallel what I have in mind in discussing the evolution of genres in Quant evaluations, and what it means to conceive of and address problems and opportunities outside the box. (You can skip this section, and go straight to the final thoughts, but I would love to share my ideas with you here.)

An Analogy for Originality in the Artistry of Herbie Hancock

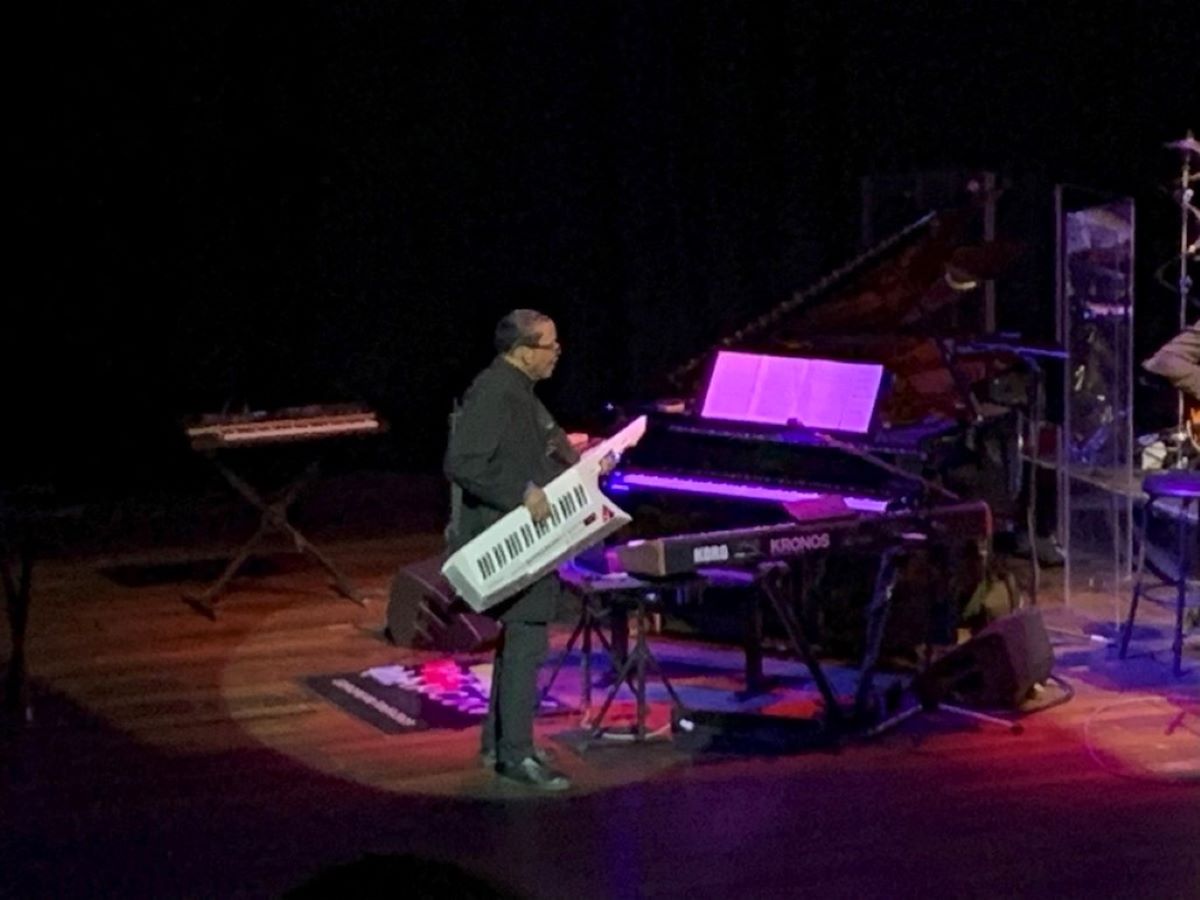

Last week I took my daughter, Maya, to see the legendary keyboardist Herbie Hancock perform live with Lionel Loueke, Terrance Blanchard and others. CHILLS along my spine, is how I would describe it. I found myself fixating on Hancock’s hand movements on the keys, and how he swiveled between the grand piano and the KORG synthesizer, and asking: "the improvisation is on-point all the time – how does he know how to go right there?"

Hancock, winner of an Academy award, and 14 Grammys, is a (if not the) major force in the evolution of jazz through the last 60 years, up to the contemporary scene.

His main start was in the 1960's as the pianist in Miles Davis' Second Great Quintet. (When Hancock was dispirited, Davis famously advised him "don't play the butter notes"). Check out this performance by the band of Wayne Shorter's composition "Footprints" from 1967 – note the symbiosis among the group and Hancocks respectful treatment of the melody.

In the 1970’s Hancock developed styles of jazz fusion and funk with the Headhunters (e.g., Chameleon.

Then in the 1980's Hancock explored electro styles, capped by the song "Rockit" – a smash that straddled jazz, pop and hip-hop. It featured scratch styling and became a mainstay for breakdancing (in upper elementary school I co-created a truly amateurish school play that ended in an ensemble Rockit dance with the best breakdancers in our school). Here's Hancock's Grammy performance.

Below is a picture of Hancock from the other night with the strapped synth popularized through the song Rockit.

Hancock did plenty more besides what I mention here, but I narrowed his contributions to just a couple to help me make my point.

His direction, especially with funk fusion and Rockit, ruffled the feathers of more than a few jazz purists. He did not mind. His response was "I have to be true to myself…it was something that I needed to do….because it takes courage to work outside the box…and yet, that’s where the growth lies”.

He also recognized that the need for progression was not just to satisfy his creative direction, but to keep the audience listening; that is, for the music, jazz, to stay alive and relevant. If someone asserts that "Rockit" was a betrayal of jazz that sacrilegiously crossed over into pop and hip-hop, I would counter argue that it opened up the world of jazz to a whole generation of pop listeners (including me). (I recognize similar developments in the genre-crossing works of recent times by Robert Glasper.)

Hancock is a perfect case study of an artist executing his craft (a) fearlessly, (b) not with the goal of pleasing everyone, (c) with the purpose of connecting with, and reaching new audiences, (d) by being open to alternative influences, (e) to achieve a harmonious melodic fusion (moving between his KORG synth, a grand piano), and (f) with constant appreciation reflection of the roots and fundamentals.

Coming Back to the Idea of the Fusion of Quant with Quant Crit in Program Evaluation

Society today presents us with situations that require critical examination of how we use the instruments on which we are trained, and an audit of the effect they have, both intended and unintended. It also requires that we adapt the applications of methods that we have honed for years. The contemporary situation poses the question: How can we expand the range of what we can do with the instruments on which we are trained, given the solutions that society needs today, recognizing that any application has social ramifications? I have in mind the need to prioritize problems of equity and social and racial justice. How do we look past conventional applications that limit the recognition, articulation, and development of solutions to important and vexing problems in society?

Rather than feeling powerless and overwhelmed, the Quant evaluator is very well positioned to do this work. I greatly appreciate the observation by Frances Stage on this point:

"…as quantitative researchers we are uniquely able to find those contradictions and negative assumptions that exist in quantitative research frames"

This is analogous to saying that a dedicated pianist in classic jazz is very well positioned to expand the progressions and reach harmonies that reflect contemporary opportunities, needs and interests. It may also require the Quant evaluator to expand his/her arrangements and instrumentation.

As Quant researchers and evaluators, we are most familiar with the "rules of playing" that reinforce "the same old song" that needs questioning. Quant Crit can give us the momentum to push the limits of our instruments and apply them in new ways.

In making these points I feel a welcome alignment with Hancock's approach: recognizing the need to break free from cliché and convention, to keep meaningful discussion going, to maximize relevance, to get to the core of evaluation purpose, to reach new audiences and seed/facilitate new collaborations.

Over the next year I'll be posting a few creations, and striking in some new directions, with syncopations and chords that try to maneuver around and through the orthodoxy – "switching up" between the "KORG and the baby grand" so to speak.

Please stay tuned.